Over the last few weeks I’ve been thinking a lot about social networking and about what the next web killer app could look like. Now I want to share some of my rough ideas on this topic.

Location-based and mobile

I strongly believe that the next web killer app will be a location-based service that combines the expressiveness of social networking with the flexibility of creating virtual spots in the real world and interacting with them. The first popular candidates in this area are Foursquare and Gowalla.

Both systems are very similar: users can define locations, share them with their friends and check in at a location to show their friends where they currently are. Both systems are also games: you can “earn” virtual rewards, e.g. by checking in at many different locations, or you can become the mayor of a location by checking in there more often than any other user. This playful element, combined with applications for the most popular mobile platforms (BlackBerry, Palm Pre, Android, iPhone, …), makes them successful rising stars.

Geocaching is another example of a great location-based service, and it’s not just virtual. People hide little containers (caches) outdoors and publish the coordinates of those caches on the Geocaching website. Other people can look up those coordinates and (in the simplest form) search for the caches at the specified location. Bigger containers hold little items which can be traded and which travel from cache to cache. Thus, in contrast to a virtual reward system, you get real physical experiences and things to trade. Geocaching encourages people to go “back to nature” and is a really cool movement.

In my opinion location-based services are just getting started. With the increasing spread of mobile internet, GPS devices and precise location detection they will take off, and a truly new wave of services will wash over us. Not everyone will like those services (privacy concerns come to mind), but the world is changing and digital natives are very open to cool new services. Thus I strongly believe that the next web killer app will again be social at its core and will make heavy use of mobile and location-based services.

In my opinion the stage is set, but current services can do much better. The next web killer app will not be limited to the playful approach. It will be more generic and usable for a broad range of activities, from sharing location-based information and media, through private date planning, to covering real business scenarios.

“Loc” as a new tune to the music

Let me introduce a new central term for a location-based entity: a loc. The system I have in mind builds entirely on those locs. In its simplest form a loc can be seen as a location, but it has additional characteristics that justify this new entity:

- A simple loc has a location and an owner.

- A loc contains arbitrary information which can include media (audio/photo/video) and rich text.

- A loc can be temporary and can carry a date/appointment.

- The owner of a loc can give access rights to other users or user groups.

- Locs are interactive: users can travel to a loc and mark it as visited (“Log your Loc” is a great mantra), and they can leave comments and a rating for the loc.

- Users should be able to search for locs in a flexible way, and locs should be shown on a map, e.g. as an overlay on Bing Maps or Google Maps.

- There could be composite/complex locs that consist of several simple locs.
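The characteristics above can be sketched as a small data model. This is only a minimal illustration in Python, not a proposed implementation: the class names `Loc` and `CompositeLoc`, all field names, and the 1 to 5 rating scale are assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Loc:
    """Hypothetical 'loc': a place plus owner, content and interactions."""
    name: str
    owner: str
    latitude: float
    longitude: float
    text: str = ""                                   # rich text, a plain string here
    media: List[str] = field(default_factory=list)   # references to audio/photo/video
    date: Optional[datetime] = None                  # set for dated (appointment) locs
    visitors: Set[str] = field(default_factory=set)
    comments: List[Tuple[str, str]] = field(default_factory=list)  # (user, comment)
    ratings: Dict[str, int] = field(default_factory=dict)          # user -> stars

    def log(self, user: str) -> None:
        """'Log your Loc': mark the loc as visited by this user."""
        self.visitors.add(user)

    def comment(self, user: str, message: str) -> None:
        self.comments.append((user, message))

    def rate(self, user: str, stars: int) -> None:
        # The 1..5 scale is an assumption; the text only asks for "a rating".
        if not 1 <= stars <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.ratings[user] = stars

    def average_rating(self) -> Optional[float]:
        if not self.ratings:
            return None
        return sum(self.ratings.values()) / len(self.ratings)


@dataclass
class CompositeLoc:
    """Hypothetical composite loc made up of several simple locs."""
    name: str
    owner: str
    parts: List[Loc] = field(default_factory=list)
```

A user would then create a loc, travel there and log it with `loc.log("bob")`. Access rights and map search are left out here to keep the sketch short.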

Characteristics of the new music style

The new location-based system I have in mind would build entirely on those locs, and it would differ from existing applications in some important and innovative ways. I strongly believe in the following characteristics of a location-based app that builds on locs:

- Social community: On today’s internet everything is about being social. We want to express ourselves, but we also want to connect with friends and interest groups. A location-based service should definitely take this aspect into account. I should be able to define personal locs and share them with my friends, and I should be able to group locs thematically. Thus I should be able to create or join groups which contain a thematic subset of locs. For example, there could be a group which collects information about great places in nature. Or what about something like geocaching? The only limit is your imagination.

- Time limitation and appointments: Dated locs for appointments are a very important feature of the new system and set it apart from present applications. Dated locs can be used for planning dates, meetings, parties… whatever. That’s a key element in my opinion! It’s relevant for private and social life as well as for business planning and meetings. Imagine you want to meet up with some friends. Instead of writing invitation cards you could publish a loc with a date and some further information (photos and details as rich text), and your friends could easily navigate to the loc with their mobile phones. After the date the loc is archived and marked as “done”. Time limitation is important as well: locs with a time span could be used for temporary information and thus make for a really vibrant experience.

- Authorization management: One important aspect that comes with personal locs and loc groups is authorization. Users should be able to define flexible access rights for their locs, especially for dated locs. They should be able to limit the audience to certain people, all of their friends, groups or the general public, and they should be able to define rights for others (read/comment/modify/full). They should also be able to publish a loc in their personal space or in a group if they want to assign it to a theme.

- Context sensitivity and triggers: Single locs could be just the beginning. Context-sensitive information is a very powerful feature. Imagine a check-in at an airport. You start with a first loc which guides you to the check-in counter. After your check-in you get more locs that guide you to the duty-free shops and the gate, for example. During the flight you could get information about your destination, and after the flight new locs could pop up for the destination airport (baggage pickup, taxi stand, rental car, …). That’s just one short example of the power of context sensitivity. The unlock process for new locs would be based on triggers: e.g. once one loc is logged, the next loc appears, and so on. Triggers are great for things like geocaching as well. Imagine you define a trigger that requires you to enter a code in order to log the loc and activate further locs. This code could be placed in a container you’ve hidden at the target location, or it could be determined by some riddle which can only be solved at that location.

- Different loc types: There could be many different loc types, each with different characteristics. The simplest form could be a single loc, i.e. just a single spot. Another type could be a multi loc, which consists of several single locs. Multi locs form a loc chain, and subsequent locs could be unlocked by triggers on previous locs. Another loc type could be a live loc, which is basically a single loc with dynamic content. This content could come from a feed or another data source and would update frequently. An example of a live loc could be a train schedule with information about delays.

- Notifications: Flexible notifications are important to keep users informed about new or updated locs. Those notifications could be based on a specific theme (group), on a relationship to a person (friend) or on spatial proximity (in the vicinity). Users should be able to manage their notifications in a very flexible and easy way, and they should be able to define notifications for anything they want.

- Mobile: A social location-based service only makes sense on a mobile device with location detection via GPS and/or other technologies. Foursquare and Gowalla have done good work here: they offer mobile applications for the most popular phone platforms and thus deliver a great mobile user experience. The future is mobile!

- Integration: Today we already have a bunch of great social applications like Facebook or Twitter. I believe that for a new social application to succeed it’s very important to integrate with those social communities. E.g. it should be possible to publish messages and locations to my existing social profiles. Integration with other great services like Bing/Google Maps is very important as well.
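Two of the characteristics above, access rights and trigger-based loc chains, can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the names `Access`, `allows`, `ChainStep` and `MultiLoc` are invented for the example, the rights read/comment/modify/full are treated as ordered levels, and the only trigger rule modelled is "logging a loc unlocks the next one", optionally guarded by a code.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict, List, Optional, Set


class Access(IntEnum):
    """Rights from the text; treating them as ordered levels is an assumption."""
    NONE = 0
    READ = 1
    COMMENT = 2
    MODIFY = 3
    FULL = 4


def allows(grants: Dict[str, Access], user: str, needed: Access) -> bool:
    """True if the level granted to the user covers the needed level."""
    return grants.get(user, Access.NONE) >= needed


@dataclass
class ChainStep:
    name: str
    unlock_code: Optional[str] = None  # hypothetical: code needed to log this step


@dataclass
class MultiLoc:
    steps: List[ChainStep]
    logged: Set[int] = field(default_factory=set)  # indices of logged steps

    def is_unlocked(self, index: int) -> bool:
        # Assumed trigger rule: logging a step unlocks the following one.
        return index == 0 or (index - 1) in self.logged

    def visible(self) -> List[str]:
        """Names of all steps the user can currently see."""
        return [s.name for i, s in enumerate(self.steps) if self.is_unlocked(i)]

    def log(self, index: int, code: Optional[str] = None) -> bool:
        """Try to log a step; returns True if the log was accepted."""
        step = self.steps[index]
        if not self.is_unlocked(index):
            return False
        if step.unlock_code is not None and code != step.unlock_code:
            return False
        self.logged.add(index)
        return True
```

In the airport example, a `MultiLoc` with the steps "Check-in counter", "Duty-free shop" and "Gate" would initially show only the first step, and each successful `log` call would reveal the next one. A geocaching-style loc would set `unlock_code` to the code hidden in the container.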

Start now!

So when should we start to develop such a new service? The answer: NOW! If you don’t develop it, somebody else will.

The technology is here, we just have to use it…

Those are my first thoughts on this very interesting topic, and I truly believe in them. What do you think about my sketches of the next web killer app? What would your next web killer app look like? I certainly have further ideas in this area, so feel free to contact me if you are interested in a deeper discussion or in some cooperative work.

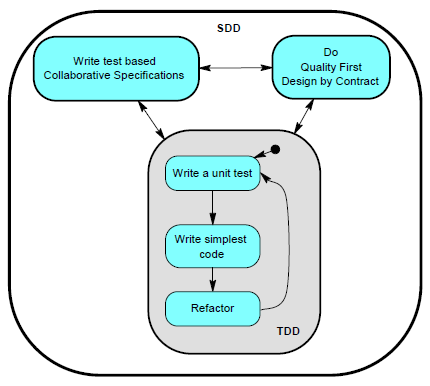

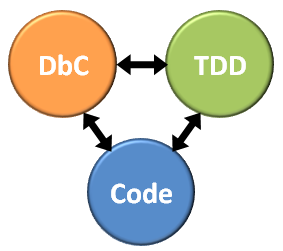

First of all, Test-Driven Development (TDD) and Design by Contract (DbC) have very similar aims. Both concepts want to improve software quality by providing a set of conceptual building blocks that help you prevent bugs before you make them. Both have an impact on the design or development process of software elements. But each has its own characteristics, and they differ in how they achieve the goal of better software. It’s necessary to understand the commonalities and differences in order to take advantage of both principles in conjunction.

In essence, DbC and TDD are about the specification of code elements, i.e. expressing the shape and behavior of components. In doing so they extend the specification possibilities offered by a programming language like C#. Of course such basic code-based specification is very important, since it allows you to express the overall behavior and constraints of your program. You write interfaces and classes with methods and properties, and you define visibility and access constraints. Furthermore the programming language gives you features like automatic boxing/unboxing, type safety, co-/contravariance and more, depending on the language you use. Moreover the compiler acts as a safety net for you and ensures correct syntax.
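To make the two kinds of specification a bit more concrete, here is a small sketch in Python. It is an illustration only: the `withdraw` function is a made-up example, and plain `assert` statements stand in for a real contract mechanism. The contract states conditions that must hold for every call, while the test pins down the behavior for one concrete example.

```python
def withdraw(balance: int, amount: int) -> int:
    """Return the new balance after withdrawing amount (hypothetical example)."""
    # Preconditions (DbC): obligations of the caller.
    assert amount > 0, "precondition: amount must be positive"
    assert amount <= balance, "precondition: amount must not exceed balance"

    new_balance = balance - amount

    # Postcondition (DbC): guarantee given by the function.
    assert 0 <= new_balance < balance, "postcondition: balance must shrink, never below 0"
    return new_balance


def test_withdraw_reduces_balance() -> None:
    # TDD-style specification: one concrete example of the expected behavior.
    assert withdraw(100, 30) == 70
```

Calling `withdraw(10, 20)` violates the second precondition and fails with an `AssertionError`, whatever tests exist. The test, in turn, documents a specific expected result that the contract alone does not spell out.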
