Edit: Find an improved implementation of the pattern here.

Edit: Find an explicit implementation of the pattern here.

This blog post presents the Property Memento as a pattern for storing property values and restoring them later. Constructive feedback is always welcome!

Problem description

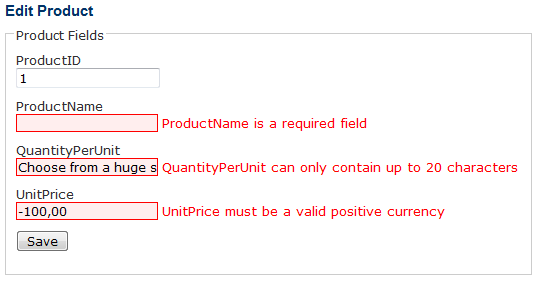

It’s a common task in many scenarios: you want to store the value of an object’s property, temporarily change it to perform some actions, and finally restore the original value. You run into this situation especially often with UI-related tasks.

The last time I came across this requirement was only a few days or weeks ago, and it was a UI task as well. I created a generic detail view in WinForms, which was built up via Reflection from an object’s properties and optional metadata. On one side, this form contained a control for the object’s values; on the other side, a validation summary control that displayed validation messages. The validation summary had to change its content and layout dynamically during validation.

Because of the form’s dynamic nature, I unfortunately couldn’t use the AutoSize feature and had to lay out the controls manually (calculating and setting control sizes, etc.). Still, I wanted to use some AutoSize functionality, at least for the validation summary. And here’s the deal: every time the validation messages on the summary change, the control should resize automatically to fit its content. From the new size, I recalculate the Height of the whole form. This can be done by temporarily setting the AutoSize property to true. Additionally, the Anchor property of both the details control and the validation summary must be changed temporarily for the layout process to complete correctly.

In the past, I would have used a manual approach like this:

```csharp
private void SomeMethod(...)
{
    int oldValSumHeight = _valSum.Height;
    int newValSumHeight = 0;

    bool oldValSumAutoSize = _valSum.AutoSize;
    AnchorStyles oldValSumAnchor = _valSum.Anchor;
    AnchorStyles oldDetailAnchor = _detailContent.Anchor;

    _valSum.AutoSize = true;
    _valSum.Anchor = AnchorStyles.Left | AnchorStyles.Top;
    _detailContent.Anchor = AnchorStyles.Left | AnchorStyles.Top;

    _valSum.SetValidationMessages(validationMessages);
    newValSumHeight = _valSum.Height;
    Height = Height - oldValSumHeight + newValSumHeight;

    _valSum.AutoSize = oldValSumAutoSize;
    _valSum.Anchor = oldValSumAnchor;
    _detailContent.Anchor = oldDetailAnchor;

    _valSum.Height = newValSumHeight;
}
```

What verbose and messy code for such a simple task! Save the old property values, set the new values, perform some action, restore the original values… It’s hard to even spot the core logic of the method. Oh dear! While this solution works, its readability is really poor. Moreover, it doesn’t handle exceptions that might be thrown by the actions in between, so the UI could be left in an inconsistent layout state and presented to the user that way. What a mess! But what’s the alternative?
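To make the exception problem concrete, here is a minimal sketch that swaps the WinForms controls for a hypothetical Widget class and uses an Action as a stand-in for the core logic (both are assumptions for illustration, not code from this post). When the core logic throws, the restore line never runs, so the property keeps its temporary value even after the caller has handled the error.

```csharp
using System;

// Hypothetical stand-in for a WinForms control; only one property matters here.
public class Widget
{
    public bool AutoSize { get; set; }
}

public static class ManualRestoreDemo
{
    // Mirrors the manual approach: save, set a temporary value, act, restore.
    public static void Layout(Widget widget, Action coreLogic)
    {
        bool oldAutoSize = widget.AutoSize; // save the original value
        widget.AutoSize = true;             // set the temporary value
        coreLogic();                        // may throw
        widget.AutoSize = oldAutoSize;      // never reached if coreLogic throws
    }

    // Runs Layout with failing core logic and returns AutoSize afterwards.
    public static bool AutoSizeAfterFailure()
    {
        var widget = new Widget { AutoSize = false };
        try
        {
            Layout(widget, () => throw new InvalidOperationException("validation failed"));
        }
        catch (InvalidOperationException)
        {
            // The caller recovers, but the widget keeps its temporary value.
        }
        return widget.AutoSize; // true: the original value false was never restored
    }
}
```

Wrapping the core logic in try/finally would fix this, but it adds even more boilerplate to a method that is already hard to read.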

The Property Memento

A better solution? What do you think about this (edit: find a better implementation here):

```csharp
private void SomeMethod(...)
{
    int oldValSumHeight = _valSum.Height;
    int newValSumHeight = 0;

    using (GetAutoSizeMemento(_valSum, true))
    using (GetAnchorMemento(_valSum, AnchorStyles.Top | AnchorStyles.Left))
    using (GetAnchorMemento(_detailContent, AnchorStyles.Top | AnchorStyles.Left))
    {
        _valSum.SetValidationMessages(validationMessages);
        newValSumHeight = _valSum.Height;
        Height = Height - oldValSumHeight + newValSumHeight;
    }

    _valSum.Height = newValSumHeight;
}

private PropertyMemento<Control, bool> GetAutoSizeMemento(
    Control control, bool tempValue)
{
    return new PropertyMemento<Control, bool>(
        control, () => control.AutoSize, tempValue);
}

private PropertyMemento<Control, AnchorStyles> GetAnchorMemento(
    Control control, AnchorStyles tempValue)
{
    return new PropertyMemento<Control, AnchorStyles>(
        control, () => control.Anchor, tempValue);
}
```

Notice that the logic of SomeMethod() is exactly the same as in the first code snippet. Now, however, the responsibility for storing and restoring property values is encapsulated in Memento objects, which are used in using(){ } statements. GetAutoSizeMemento() and GetAnchorMemento() are just two simple helper methods that create the Memento objects; they exist only to make this blog post more readable.
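Stacked using statements without braces, as in SomeMethod() above, are simply nested, and nested resources are disposed in reverse order of creation. The following sketch uses a hypothetical TracingScope class (not part of this post) that logs when it is created and disposed, to illustrate that the memento created last is restored first.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical disposable that records its creation and disposal in a log.
public sealed class TracingScope : IDisposable
{
    private readonly string _name;
    private readonly List<string> _log;

    public TracingScope(string name, List<string> log)
    {
        _name = name;
        _log = log;
        _log.Add("enter " + name);
    }

    public void Dispose() => _log.Add("exit " + _name);
}

public static class StackedUsingDemo
{
    public static List<string> Run()
    {
        var log = new List<string>();
        // Same shape as the three stacked using statements in SomeMethod().
        using (new TracingScope("AutoSize", log))
        using (new TracingScope("valSum.Anchor", log))
        using (new TracingScope("detail.Anchor", log))
        {
            log.Add("core logic");
        }
        // Dispose() ran innermost first: detail.Anchor, valSum.Anchor, AutoSize.
        return log;
    }
}
```

This order matters when two mementos target the same property: the inner one captures the outer one’s temporary value and restores it, and then the outer one restores the true original.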

So how does the Property Memento work conceptually? When it is created, the Property Memento stores the original value of an object’s property. Optionally, it can also set a new temporary value for this property. During the lifetime of the Property Memento, the developer is free to change the property value. Finally, when Dispose() is called on the Property Memento, the initial property value is restored. Thus the Property Memento cleanly encapsulates the task of storing and restoring property values.
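The concept itself does not require Reflection. As an alternative sketch (a swapped-in technique, not the implementation used in this post), the same lifecycle can be expressed with a getter delegate and a setter delegate. DelegateMemento and Caption are hypothetical names chosen for this example.

```csharp
using System;

// Hypothetical memento: reads the original value via a getter, restores it via a setter.
public sealed class DelegateMemento<T> : IDisposable
{
    private readonly Action<T> _setter;
    private readonly T _originalValue;

    // Stores the original value on creation.
    public DelegateMemento(Func<T> getter, Action<T> setter)
    {
        _setter = setter;
        _originalValue = getter();
    }

    // Stores the original value, then applies a temporary one.
    public DelegateMemento(Func<T> getter, Action<T> setter, T tempValue)
        : this(getter, setter)
    {
        _setter(tempValue);
    }

    // Restores the original value.
    public void Dispose() => _setter(_originalValue);
}

// Hypothetical stand-in for a control.
public class Caption
{
    public int Width { get; set; } = 100;
}

public static class DelegateMementoDemo
{
    public static (int inside, int after) Run()
    {
        var caption = new Caption();
        int inside;
        using (new DelegateMemento<int>(() => caption.Width, w => caption.Width = w, 250))
        {
            inside = caption.Width; // the temporary value
            caption.Width = 300;    // may be changed freely during the lifetime
        }
        return (inside, caption.Width); // Width is back to its original value
    }
}
```

The trade-off: no name lookup at run time, but the call site has to spell out the property twice, once for reading and once for writing.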

The technical implementation of the Property Memento in C# uses Reflection and is shown below (edit: find a better implementation here):

```csharp
using System;
using System.Linq.Expressions;

public class PropertyMemento<TClass, TProperty> : IDisposable
    where TClass : class
{
    private readonly TProperty _originalPropertyValue;
    private readonly TClass _classInstance;
    private readonly Expression<Func<TProperty>> _propertySelector;

    // Captures the current value of the selected property.
    public PropertyMemento(TClass classInstance,
        Expression<Func<TProperty>> propertySelector)
    {
        _classInstance = classInstance;
        _propertySelector = propertySelector;
        _originalPropertyValue =
            ReflectionHelper.GetPropertyValue(classInstance, propertySelector);
    }

    // Captures the current value, then sets the temporary value.
    public PropertyMemento(TClass classInstance,
        Expression<Func<TProperty>> propertySelector, TProperty tempValue)
        : this(classInstance, propertySelector)
    {
        SetPropertyValue(tempValue);
    }

    // Restores the value captured on construction.
    public void Dispose()
    {
        SetPropertyValue(_originalPropertyValue);
    }

    private void SetPropertyValue(TProperty value)
    {
        ReflectionHelper.SetPropertyValue(
            _classInstance, _propertySelector, value);
    }
}
```

This implementation uses the following ReflectionHelper class:

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;
using System.Reflection;

static class ReflectionHelper
{
    public static PropertyInfo GetProperty<TEntity, TProperty>(
        Expression<Func<TProperty>> propertySelector)
    {
        return GetProperty<TEntity>(GetPropertyName(propertySelector));
    }

    // Looks up a public property of T by name; First() throws
    // InvalidOperationException if no such property exists.
    public static PropertyInfo GetProperty<T>(string propertyName)
    {
        var propertyInfos = typeof(T).GetProperties();
        return propertyInfos.First(pi => pi.Name == propertyName);
    }

    // Expects a body like control.AutoSize. Any other shape (e.g. a method call)
    // makes memberExpression null and causes a NullReferenceException.
    public static string GetPropertyName<T>(
        Expression<Func<T>> propertySelector)
    {
        var memberExpression = propertySelector.Body as MemberExpression;
        return memberExpression.Member.Name;
    }

    // Only the property name is taken from the selector;
    // the value is read from entity.
    public static TProperty GetPropertyValue<TEntity, TProperty>(
        TEntity entity, Expression<Func<TProperty>> propertySelector)
    {
        return (TProperty)GetProperty<TEntity, TProperty>(propertySelector)
            .GetValue(entity, null);
    }

    public static void SetPropertyValue<TEntity, TProperty>(TEntity entity,
        Expression<Func<TProperty>> propertySelector, TProperty value)
    {
        GetProperty<TEntity, TProperty>(propertySelector)
            .SetValue(entity, value, null);
    }
}
```
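To see what GetPropertyName actually receives, the sketch below inspects a selector of the form () => instance.AutoSize directly, using a hypothetical Sample class. The compiler turns the lambda into an expression tree whose body is a MemberExpression, and its Member is the PropertyInfo of the selected property.

```csharp
using System;
using System.Linq.Expressions;
using System.Reflection;

// Hypothetical class with a property to select.
public class Sample
{
    public bool AutoSize { get; set; }
}

public static class SelectorInspection
{
    public static string Describe()
    {
        var sample = new Sample();
        Expression<Func<bool>> selector = () => sample.AutoSize;

        // The body is the member access "sample.AutoSize".
        var member = (MemberExpression)selector.Body;
        var property = (PropertyInfo)member.Member;

        // NodeType is ExpressionType.MemberAccess for a property access.
        return selector.Body.NodeType + " " + property.Name + " on " + property.DeclaringType.Name;
    }
}
```

The instance captured in the lambda only appears in member.Expression (a field access on a compiler-generated closure). ReflectionHelper uses only the property name, so values are always read from and written to the instance passed to the PropertyMemento constructor.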

As you can see, the Property Memento implementation is no rocket science. The constructor takes the class instance and an Expression that selects the property on this instance. Optionally, you can provide a value that is set temporarily in place of the original one. Getting and setting the property value is done via the ReflectionHelper class. For the sake of brevity, the implementation has no real error handling; you may want to add your own checks. What I like is the use of generics: the compiler ensures that the property selector and the temporary value agree on the type TProperty, which eliminates many sources of errors and guides developers in using the Property Memento.
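One possible set of checks, sketched under the assumption that a clear ArgumentException is preferable to a NullReferenceException: reject selectors that are not property accesses, and unwrap the Convert node the compiler inserts when, for example, an int property is selected through Func&lt;object&gt;. CheckedReflection and Probe are hypothetical names; this is not the post’s code.

```csharp
using System;
using System.Linq.Expressions;
using System.Reflection;

public static class CheckedReflection
{
    // Returns the PropertyInfo selected by an expression like () => obj.Prop,
    // or throws ArgumentException with a readable message.
    public static PropertyInfo GetSelectedProperty<T>(Expression<Func<T>> selector)
    {
        if (selector == null)
            throw new ArgumentNullException(nameof(selector));

        Expression body = selector.Body;

        // () => obj.IntProp typed as Func<object> compiles to Convert(obj.IntProp).
        if (body is UnaryExpression unary && unary.NodeType == ExpressionType.Convert)
            body = unary.Operand;

        // Accept only member accesses that refer to a property (not a field).
        if (body is MemberExpression member && member.Member is PropertyInfo property)
            return property;

        throw new ArgumentException(
            "Selector must be a property access, but was: " + selector.Body,
            nameof(selector));
    }
}

// Hypothetical class with one property and one field.
public class Probe
{
    public int Count { get; set; }
    public int Field;
}
```

A checked GetPropertyName could then simply return the Name of the PropertyInfo found this way.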

Really a pattern and a Memento?

I’ve now used the Property Memento successfully in several different situations, so I think it’s justified to call it a pattern.

But is it also a Memento? Wikipedia says the following about the Memento pattern:

“The memento pattern is a software design pattern that provides the ability to restore an object to its previous state […]”

This holds for the Property Memento, where the “object” is a property value on a class instance: the “previous state” is stored when the Memento is created, and “restore an object” happens when Dispose() is called.

Finally

This blog post has shown the Property Memento as a pattern for storing original property values and restoring them later.

Compared to the “manual way”, the Property Memento has valuable advantages. It encapsulates the responsibility of storing an original value and restoring it when the using() block ends. Client code stays clean and is reduced to its core logic, delegating the rest to the Property Memento through an explicit using(){ } block. As a result, the code becomes much more concise and readable. Last but not least, the Property Memento is exception-safe: a using() statement compiles to a try/finally construct, so Dispose() is called even if an exception is thrown inside the block. The exception itself still propagates, but the original property values are restored on the way out, and the user sees a consistently laid-out UI.
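The exception-safety claim can be checked with a small sketch. A using statement behaves roughly like a try/finally block that calls Dispose() in the finally part, so the restore runs even when the block throws, while the exception itself still reaches the caller. Gauge and AutoSizeMemento are hypothetical stand-ins for a control and a Property Memento.

```csharp
using System;

// Hypothetical stand-in for a control.
public class Gauge
{
    public bool AutoSize { get; set; }
}

// Hypothetical minimal memento for a single bool property.
public sealed class AutoSizeMemento : IDisposable
{
    private readonly Gauge _gauge;
    private readonly bool _original;

    public AutoSizeMemento(Gauge gauge, bool tempValue)
    {
        _gauge = gauge;
        _original = gauge.AutoSize; // store the original value
        gauge.AutoSize = tempValue; // apply the temporary value
    }

    public void Dispose() => _gauge.AutoSize = _original; // restore
}

public static class ExceptionSafetyDemo
{
    // Reports whether the exception reached the caller and the AutoSize value afterwards.
    public static (bool exceptionPropagated, bool autoSizeAfter) Run()
    {
        var gauge = new Gauge { AutoSize = false };
        bool propagated = false;
        try
        {
            // Roughly: var m = new AutoSizeMemento(gauge, true);
            //          try { ... } finally { m.Dispose(); }
            using (new AutoSizeMemento(gauge, true))
            {
                throw new InvalidOperationException("layout failed");
            }
        }
        catch (InvalidOperationException)
        {
            propagated = true; // the exception was not swallowed
        }
        return (propagated, gauge.AutoSize); // AutoSize is back to false
    }
}
```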