On PDC 2008 Ray Ozzie went out on a limb by saying that Windows Azure will be „setting the stage for the next 50 years of systems“. Everyone (me too) was excited about this new technology and people got inspired by Microsoft’s vision and its new cloud computing platform.

On PDC 2008 Ray Ozzie went out on a limb by saying that Windows Azure will be „setting the stage for the next 50 years of systems“. Everyone (me too) was excited about this new technology and people got inspired by Microsoft’s vision and its new cloud computing platform.

16 months later, here we are: Windows Azure is live, the platform has been consolidated (R.I.P., Live Framework…) and data centers have been built up around the world.

But is Windows Azure this game changer that Microsoft promised and which they bet on? Will Windows Azure (as product) succeed? I’m not sure, but let’s see…

First things first

First things first

My thoughts on this topic have a certain background. In 2009 my company SDX invested significant research time into the innovative areas of cloud computing in general and Windows Azure in particular. And we’re still moving forward in this area. In scope of the NewCloudApp() contest we made up a little showcase named Rating Stress Simulator which you can see on the right and which you can try out now on Windows Azure.

On the architectural side we tried to use many of the possibilities offered by Windows Azure: WebRole, WorkerRole, message queue, table storage, WCF service hosted in the WebRole, …

We gathered some experiences with Windows Azure, both on the technical and on the business side. We find the platform very promising and we believe that it’s the best cloud platform on the market from a technical point of view. It gives great flexibility for developers while still utilizing their existing technical skills with .NET, Visual Studio, SQL Server and others.

Costs always matter!

While we see a great platform we’re also unsure if we should bet on Windows Azure. The main reason for this are the fixed costs for idle hosting. This means the costs for just holding our application online and running without any user on it. For this task our simple application with two roles, a message queue and table storage (no SQL DB included!) has monthly costs of about 130€ (~177$)! The main costs come from the two running roles and I’m gonna tell you: we aren’t happy with the current situation.

And we’re not alone with our criticism. Windows Azure costs are highly debated these days and to make hosting small applications on Azure less expensive is the no. 1 request of developers who voted on the My Great Windows Azure Idea website. Several blog posts and discussions run into the same direction. When people realized that Azure computation costs are based on wall clock time and not on real CPU time they equally realized that hosting on Windows Azure is ridiculously expensive compared to other options on the market.

Let’s do another calculation example for idle costs. Let’s imagine a little start-up application with 2 roles (small instance), 1 Web DB and 1 AppFabric Service Bus connection, up and running all the time and waiting there to be used. This scenario leads to monthly costs of about 137€ (~193$), which results in costs for 1 year of 1610€ (~2270$)! Those idle costs are fixed costs for this scenario which outline the entrance barrier for just holding the application online without any traffic. Isn’t one of the basic ideas of cloud computing to keep the fixed costs low and transform them into variable costs? At least Windows Azure doesn’t follow this idea or not on a reasonable scale… Hence it isn’t attractive for start-ups and little companies which could buy and run a server on their own for these costs and get full flexibility.

Competitors

But what about the offers of other companies and their attractivity? I’ll start with shared hosting as possibility to outsource infrastructure and application hosting. Shared hosting is many times cheaper in comparison to Windows Azure. For sure Windows Azure offers additional values: deployment, management, scalability. But the point is that for most people who are interested in Azure those values don’t matter much. For most people, companies and their applications those qualities are not nearly justifying the higher costs of Azure hosting compared to shared hosting.

But let’s come to two ‚real‘ cloud providers: Google with its AppEngine offers a certain amount of free quotas and in measuring computation costs they got the right idea. Google charges only for the actual consumption of CPU time (in comparison to the wall-clock-time-based model of Windows Azure), thus you are not charged for idle time of your processes.

But let’s come to two ‚real‘ cloud providers: Google with its AppEngine offers a certain amount of free quotas and in measuring computation costs they got the right idea. Google charges only for the actual consumption of CPU time (in comparison to the wall-clock-time-based model of Windows Azure), thus you are not charged for idle time of your processes.

Amazon with its EC2 calculates on a wall clock time basis, but here you have the full flexibility of arming your VMs with everything you want.

Amazon with its EC2 calculates on a wall clock time basis, but here you have the full flexibility of arming your VMs with everything you want.

And then there’s Microsoft with Windows Azure which doesn’t include the benefits of both models. On the one side Windows Azure charges you on a wall clock time basis and thus you’re paid for idle time of your applications as well. On the other side Windows Azure is VM-based where every „role“ depicts an application fragment that maps 1:1 to a VM. The serious drawback here is that you are not able to host more than 1 role in a single VM instance. Thus you don’t get any of the flexibility of Amazon’s EC2 VMs.

Microsoft should revise this role/VM-based scaling model and/or the wall clock time basis. With the current model it’s not attractive for hobby developers, start-ups and smaller companies. The entrance barrier is ways too high and the scalability is too limited. Why should any small company use Windows Azure over AppEngine or Ec2? I don’t know…

But what are the implications of not attracting hobby developers, start-ups and little companies? Perhaps we could learn from the past, so let’s take a look in history…

A brief look in history

Some days ago an older colleague of mine told me from the rise of Windows and the fail of OS/2 Warp (this was before my active computer time, so it was interesting to hear this story from ancient ages…). He told me that OS/2 had striking features on the technical side in comparison with Windows, but it couldn’t win the OS war. It was beaten by Windows and died a slow and painful death…

Some days ago an older colleague of mine told me from the rise of Windows and the fail of OS/2 Warp (this was before my active computer time, so it was interesting to hear this story from ancient ages…). He told me that OS/2 had striking features on the technical side in comparison with Windows, but it couldn’t win the OS war. It was beaten by Windows and died a slow and painful death…

One reason he sees for this is the poor attraction of hobby developers and the inability to build up a big developer community around OS/2. Windows came with a cheap compiler which enabled everyone to write his own Windows software. Microsoft was able to attract hobbyists which resulted in a huge software pool of shareware, freeware etc. and yielded a large developer community and knowledge base to develop applications for Windows. OS/2 Warp failed in this case: the IBM compiler was expensive (> 1000 Deutschmark, that’s about 650$ in the year 1990) and even while the platform and technical features were great, nobody was attracted to develop against it due to the high entrance barrier of development costs. OS/2 couldn’t generate a critical mass of developers, development knowledge and subsequently software as output for the users.

Hobby developers matter!

I don’t get around to see parallels between OS/2 and Windows Azure on the costs side. Microsoft should learn from history and should prevent mistakes that have been done by others before. It’s crucial to attract a broad range of developers to use Windows Azure for expressing their ideas and building the next wave of killer applications. With the current costs just very few developers will be attracted to make experiences with the platform. But that’s a big mistake!

By bringing Windows Azure to hobby developers and smaller companies, Microsoft would open heavy doors to the real business. Developers and architects would take their experiences with the platform from their personal lifes to the business and would promote Windows Azure when asked for it or when to decide for the platform of a new application. Accordingly the entrance barrier even for bigger companies would be ways lower: developers who have gained experience with the platform would be able to spread their knowledge to colleagues. This builds up a chain reaction and an exponential growing amount of developers would use the platform. It would be the best promotion for Windows Azure that Microsoft could get.

For today because of the costs not many developers will suggest Windows Azure with good conscience and that’s a pitty. Please, Microsoft: Community matters! Realize the potential of your platform and the implications that come with the amount of hobby developers who are using Windows Azure.

The need for a „Mini Azure“ offer

One suggestion follows: realize a „Mini Azure“ package as new offer on your Azure platform. Low costs (<10$ per month), few resources, no dedicated machines, weak SLAs, but online 24×7 – just being there to get people started. Remove this high entrance barrier or you will go the OS/2 way down the road. Your great platform leads you nowhere and will not succeed, if you don’t have people who use it…

Mini Azure has been suggested by Jouni Heikniemi before, so take a look at what he and others say.

Conclusion

To summarize things: if nothing changes I’m currently afraid that Microsoft fails with Windows Azure, at least I fear it will be a non-starter. And that’s a bad basis for future development. At the moment Microsoft scares people away and they will meet problems to get them back. I say it again: I think they have a very good development platform. But the best platform doesn’t lead them anywhere if it’s too expensive for people to use this platform.

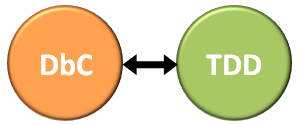

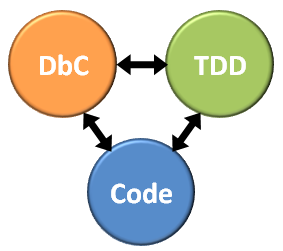

To say first, Test-Driven Development (TDD) and Design by Contract (DbC) have very similar aims. Both concepts want to improve software quality by providing a set of conceptual building blocks that allow you to prevent bugs before making them. Both have impact on the design or development process of software elements. But both have their own characteristics and are different in achieving the purpose of better software. It’s necessary to understand commonalities and differences in order to take advantage of both principles in conjunction.

To say first, Test-Driven Development (TDD) and Design by Contract (DbC) have very similar aims. Both concepts want to improve software quality by providing a set of conceptual building blocks that allow you to prevent bugs before making them. Both have impact on the design or development process of software elements. But both have their own characteristics and are different in achieving the purpose of better software. It’s necessary to understand commonalities and differences in order to take advantage of both principles in conjunction.

In essence, DbC and TDD are about specification of code elements, thus expressing the shape and behavior of components. Thereby they are extending the specification possibilities that are given by a programming language like C#. Of course such basic code-based specification is very important, since it allows you to express the overall behavior and constraints of your program. You write interfaces, classes with methods and properties, provide visibility and access constraints. Furthermore the programming language gives you possibilities like automatic boxing/unboxing, datatype safety, co-/contravariance and more, depending on the language that you use. Moreover the language compiler acts as safety net for you and ensures correct syntax.

In essence, DbC and TDD are about specification of code elements, thus expressing the shape and behavior of components. Thereby they are extending the specification possibilities that are given by a programming language like C#. Of course such basic code-based specification is very important, since it allows you to express the overall behavior and constraints of your program. You write interfaces, classes with methods and properties, provide visibility and access constraints. Furthermore the programming language gives you possibilities like automatic boxing/unboxing, datatype safety, co-/contravariance and more, depending on the language that you use. Moreover the language compiler acts as safety net for you and ensures correct syntax.